Using "Verboten" Property Names in Custom Controls

Sun Nov 02 09:46:28 EST 2014

In an attempt to save you from yourself, Designer prevents you from naming your custom control properties after SSJS keywords such as "do" and "for". This is presumably because a construct like compositeData.for would throw both a syntax error in SSJS and the developer into a tizzy. However, sometimes you want to use one of those names: they're not illegal in EL, after all, and even SSJS can still reach the value via compositeData['for'] or compositeData.get("for").

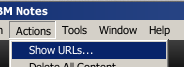

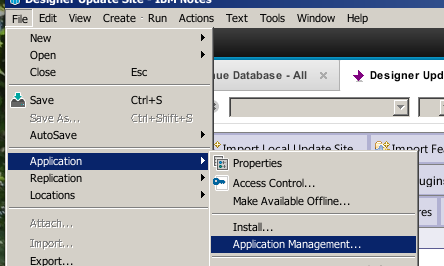
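To make the distinction concrete, here is a sketch of how markup inside the custom control might read a property named "for" in each language. The xp:text wrappers are only there to give each expression somewhere to live. The EL form relies on the point above that "for" is not illegal in EL.

```xml
<!-- EL: dot notation is fine, since "for" is not a reserved word in EL -->
<xp:text value="#{compositeData.for}"/>

<!-- SSJS: bracket notation avoids the keyword clash -->
<xp:text value="#{javascript:compositeData['for']}"/>

<!-- SSJS: get() with the property name as a string also works -->
<xp:text value="#{javascript:compositeData.get('for')}"/>

<!-- SSJS: #{javascript:compositeData.for} is the form that fails with a syntax error -->
```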

Fortunately, this is possible: if you go to the Package Explorer view in Designer and open up the "CustomControls" folder of your NSF, you'll see each custom control as a pair of files: an ".xsp" file representing the control markup and an ".xsp-config" file representing the metadata specified in the properties pane, including the custom properties. Assuming you attempted to type "for" for the property name and were stuck with "fo", you'll see a block like this:

```xml
<property>
    <property-name>fo</property-name>
    <property-class>string</property-class>
</property>
```

Change that "fo" to "for", save the file, and all is well. You'll be able to use the property just as you would any other, with the caveat above about how to access it from SSJS. I wouldn't make a habit of using certain keywords, such as "class", but "for" is perfectly fine and lets your controls match stock controls such as xp:pager.
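After the edit, the property block in the .xsp-config file should look like this. Only the property name changes; the rest of the file stays as Designer generated it.

```xml
<!-- Property name restored to the keyword Designer refused to accept -->
<property>
    <property-name>for</property-name>
    <property-class>string</property-class>
</property>
```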

This came up for me in one of the controls I like to keep around when working with custom renderers: a rendererInfo control that displays the client ID, theme family, component family, renderer type, and renderer class of a target component. Since I keep forgetting where I last used it, I figure I should post it here, partly for my own future reference.

```xml
<?xml version="1.0" encoding="UTF-8"?>
<xp:view xmlns:xp="http://www.ibm.com/xsp/core">
    <!-- Shows rendering details for the component whose ID is passed in the "for" property -->
    <table>
        <tr>
            <th>Client ID</th>
            <td><xp:text><xp:this.value><![CDATA[#{javascript:
                var comp = getComponent(compositeData['for']);
                return comp == null ? 'null' : comp.getClientId(facesContext);
            }]]></xp:this.value></xp:text></td>
        </tr>
        <tr>
            <th>Theme Family</th>
            <td><xp:text><xp:this.value><![CDATA[#{javascript:
                var comp = getComponent(compositeData['for']);
                return comp == null ? 'null' : comp.getStyleKitFamily();
            }]]></xp:this.value></xp:text></td>
        </tr>
        <tr>
            <th>Component Family</th>
            <td><xp:text><xp:this.value><![CDATA[#{javascript:
                var comp = getComponent(compositeData['for']);
                return comp == null ? 'null' : comp.getFamily();
            }]]></xp:this.value></xp:text></td>
        </tr>
        <tr>
            <th>Renderer Type</th>
            <td><xp:text><xp:this.value><![CDATA[#{javascript:
                var comp = getComponent(compositeData['for']);
                return comp == null ? 'null' : comp.getRendererType();
            }]]></xp:this.value></xp:text></td>
        </tr>
        <tr>
            <th>Renderer Class</th>
            <td><xp:text><xp:this.value><![CDATA[#{javascript:
                var comp = getComponent(compositeData['for']);
                var renderer = comp == null ? null : comp.getRenderer(facesContext);
                // Report the class of the wrapped (underlying) renderer, not the wrapper itself
                return renderer != null ? renderer.getWrapped().getClass().getName() : 'N/A';
            }]]></xp:this.value></xp:text></td>
        </tr>
    </table>
</xp:view>
```
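As a usage sketch, assume the control above is saved as a custom control named "rendererInfo" and that the page has a component with the ID "inputText1" (both names are my assumptions for illustration). Dropping it onto a page then looks like this, with the `for` attribute naming the component to inspect, much as xp:pager's does:

```xml
<?xml version="1.0" encoding="UTF-8"?>
<xp:view xmlns:xp="http://www.ibm.com/xsp/core"
    xmlns:xc="http://www.ibm.com/xsp/custom">

    <!-- The component to inspect (hypothetical ID) -->
    <xp:inputText id="inputText1"/>

    <!-- The custom control above, assumed to be named "rendererInfo" -->
    <xc:rendererInfo for="inputText1"/>
</xp:view>
```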